In today's rapidly evolving data landscape, businesses are constantly seeking ways to optimize their IT infrastructure, reduce complexity, and improve operational efficiency. Storage, a critical component of any IT environment, is often a significant challenge. Traditional storage setups typically use separate systems for block-level data (SAN - Storage Area Network), file-level data (NAS - Network Attached Storage), and object storage, which leads to siloed environments, increased management overhead, and higher costs. But what if you could consolidate these disparate systems into a single, unified platform?

This guide will show you how to conquer storage complexity and why a unified SAN, NAS and Object Storage solution is the key to a more efficient, powerful, and streamlined IT environment.

Understanding SAN, NAS and Object: The Traditional Divide

Before diving into the benefits of unified storage, let's briefly define the three primary storage architectures:

•Storage Area Network (SAN): A high-speed network that provides block-level access to data. SANs are typically used for applications requiring high performance and low latency, such as databases, virtualized environments, and mission-critical applications. Data is accessed as raw blocks, similar to how a local hard drive is accessed.

•Network Attached Storage (NAS): A file-level storage server connected to a computer network that provides data access to a heterogeneous group of clients. NAS is ideal for file sharing, collaboration, and archives. Data is accessed as files and folders over standard network protocols like NFS or SMB.

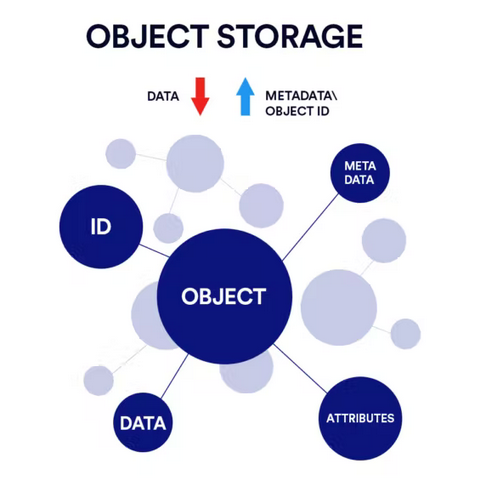

•Object Storage: Stores data as objects instead of files or blocks. Each object consists of data, metadata, and a unique identifier used to retrieve it. The namespace is flat rather than hierarchical, which makes object storage easy to scale. It is mainly used to store and access unstructured data such as video and audio files and IoT data.

Want a quick primer? See the Wikipedia articles on NFS and SMB for background reading.

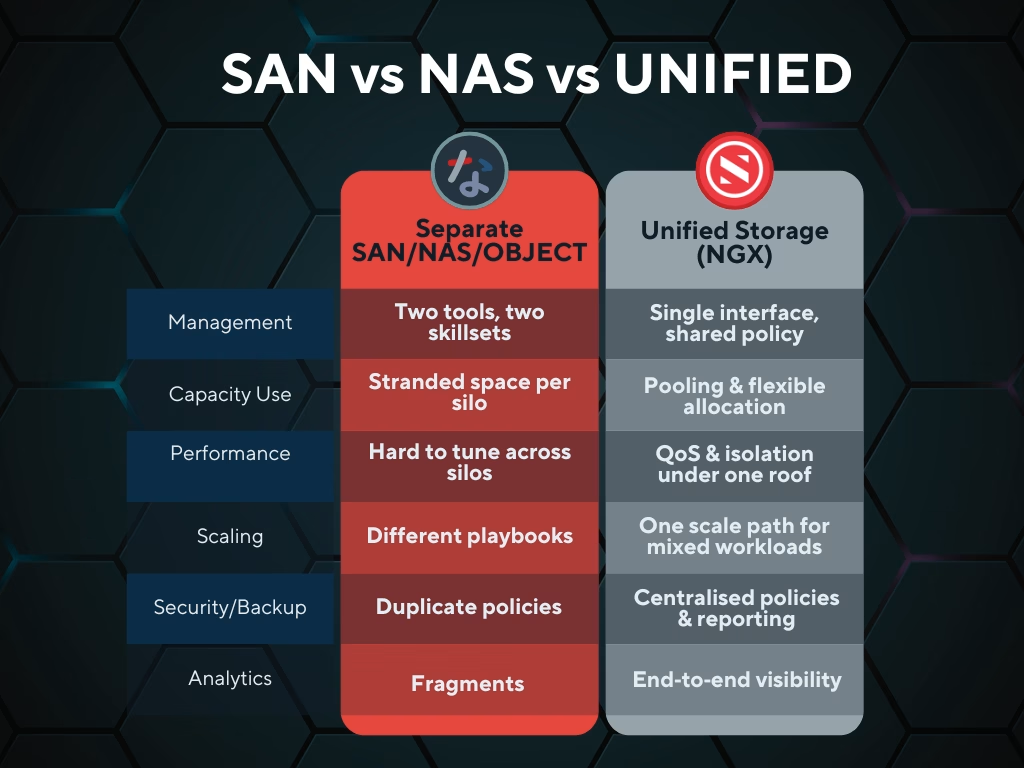
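To make the three definitions above more concrete, here is a minimal in-memory sketch of how each access model addresses data. It is only an illustration: the class names (`BlockDevice`, `FileShare`, `Bucket`) and the tiny `BLOCK_SIZE` are hypothetical and do not correspond to any real storage API.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # unrealistically small, chosen so the example is easy to follow


class BlockDevice:
    """Block access: fixed-size blocks addressed by number, no names or metadata."""

    def __init__(self, num_blocks):
        self._blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def write_block(self, index, data):
        if len(data) != BLOCK_SIZE:
            raise ValueError("block writes must be exactly BLOCK_SIZE bytes")
        self._blocks[index] = data

    def read_block(self, index):
        return self._blocks[index]


class FileShare:
    """File access: data addressed by a hierarchical path of folders and files."""

    def __init__(self):
        self._files = {}

    def write(self, path, data):
        self._files[path] = data

    def read(self, path):
        return self._files[path]

    def listdir(self, directory):
        prefix = directory.rstrip("/") + "/"
        return sorted(p for p in self._files if p.startswith(prefix))


@dataclass
class StoredObject:
    data: bytes
    metadata: dict = field(default_factory=dict)


class Bucket:
    """Object access: a flat namespace of keys, each holding data plus metadata."""

    def __init__(self):
        self._objects = {}

    def put(self, key, data, **metadata):
        self._objects[key] = StoredObject(data, dict(metadata))

    def get(self, key):
        return self._objects[key]


# Block: the caller picks a block number and must supply a full block.
disk = BlockDevice(num_blocks=8)
disk.write_block(2, b"ABCD")

# File: the caller names a path inside a folder hierarchy.
share = FileShare()
share.write("/projects/report.txt", b"quarterly numbers")

# Object: the caller uses a unique key and attaches descriptive metadata.
bucket = Bucket()
bucket.put("video-0001", b"raw video bytes", content_type="video/mp4")
```

The point of the sketch is the addressing scheme: a block number, a path, or a key with metadata. Real SAN, NAS, and object systems add networking, caching, and data protection on top of these basic models.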

Why Separate SAN, NAS and Object Storage Systems Are Holding You Back

Traditionally, organizations would deploy separate SAN, NAS and object storage systems to meet their diverse storage needs. This often resulted in increased hardware costs, complex management interfaces, and inefficient resource utilization. As data demands grow, this legacy approach creates more problems than solutions. Here are the reasons why it's time to rethink:

- Increased Hardware & Capital Costs

Maintaining distinct SAN, NAS and object storage systems often means duplicated infrastructure: extra servers, storage units, and networking gear. This drives up CAPEX and leads to underutilized assets.

- Complex Management & Silos

Each system typically comes with its own management interface, requiring specialized skillsets and additional training. Admins waste time toggling between platforms, increasing the risk of errors.

- Inefficient Resource Utilization

Resources are locked into separate storage pools. When one system is underused and another is at capacity, you can’t shift resources easily, which leads to waste and bottlenecks.

- Scalability Challenges

Scaling SAN, NAS and object storage independently requires different tools, processes, and sometimes vendors. This not only adds cost but also reduces operational agility.

- Security & Compliance Gaps

Three systems mean three sets of security protocols and backup policies, which can result in inconsistent data protection strategies and compliance risks.

- Maintenance Overhead

Managing firmware updates, patches, and performance tuning on three different platforms increases operational overhead and downtime exposure.

- Limited Visibility & Analytics

Siloed systems provide fragmented data insights, making it hard to get a unified view of storage health, capacity trends, and performance analytics.

- Inflexibility for Hybrid Workloads

Today’s workloads span everything from VMs and containers to unstructured media. Traditional setups lack the flexibility to support block, file, and object on the same architecture without trade-offs.

The Advantages of Unified SAN, NAS and Object Storage

The concept of unified storage delivers a multitude of benefits that directly address the pain points of modern IT environments:

- Reduced Management Complexity: By consolidating SAN, NAS, and Object Storage into a single system, unified storage significantly simplifies management. Instead of juggling multiple interfaces and separate management tools, IT teams can oversee their entire storage infrastructure from a single pane of glass. This streamlines operations, reduces the learning curve for new administrators, and frees up valuable IT resources.

- Improved Operational Efficiency: A unified system leads to better resource utilization. Storage capacity can be dynamically allocated to block, file, or object workloads as needed, which reduces stranded space. This flexibility keeps your storage resources aligned with your business demands, leading to greater efficiency and cost savings.

- Elimination of Siloed Systems: The traditional approach of separate SAN, NAS and object storage creates data silos, making data sharing and collaboration challenging. A unified platform breaks down these barriers, fostering a more integrated and collaborative data environment. This is crucial for modern applications and workflows that often require access to both block and file data.

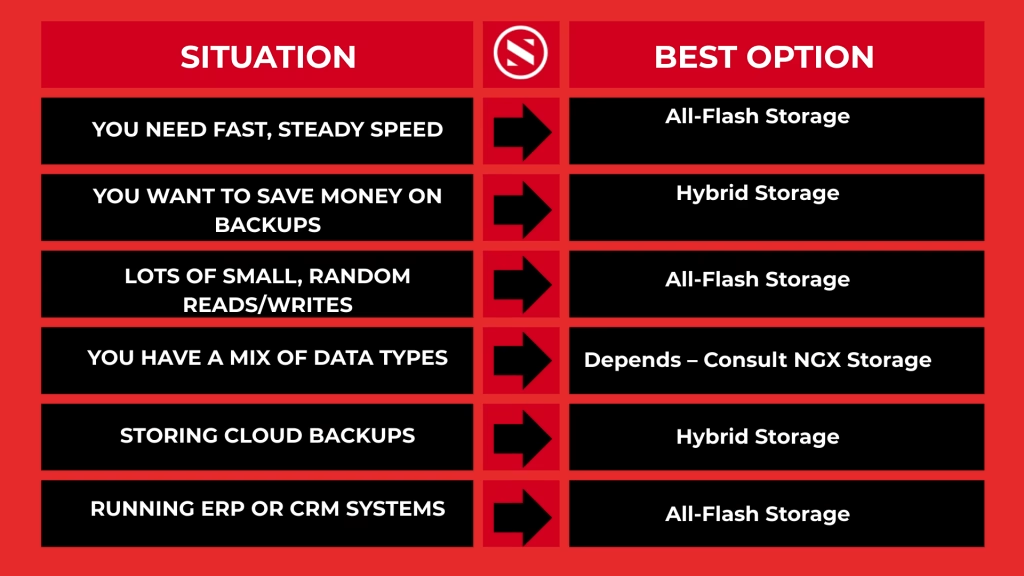

- Flexibility for Diverse Workloads: The ability to handle block, file, and object data on a single platform provides considerable flexibility. You can support high-performance databases and virtual machines (block-level access) alongside large file shares (file-level access) and API-driven object storage for unstructured data, without compromising performance or efficiency. This adaptability makes unified storage a good fit for a wide range of enterprise and SMB workloads.

- Enhanced Performance and Reliability: Unified storage solutions are designed to ensure optimal performance for all workloads. Their architecture is built to deliver high throughput and low latency, crucial for demanding applications. The system's inherent reliability and data protection features further safeguard critical business data.
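The resource-utilization argument above can be illustrated with a small calculation. The sketch below compares the capacity shortfall when each workload type is confined to its own silo against the shortfall when the same raw capacity sits in one shared pool. All numbers and function names are hypothetical and chosen only for illustration, and the model ignores real-world factors such as RAID overhead, data reduction, and performance tiers.

```python
def shortfall_siloed(capacity_tb, demand_tb):
    """Each silo serves only its own workload; spare space elsewhere cannot help."""
    return sum(max(0, demand_tb[k] - capacity_tb[k]) for k in demand_tb)


def stranded_siloed(capacity_tb, demand_tb):
    """Free capacity that sits idle inside silos that are not full."""
    return sum(max(0, capacity_tb[k] - demand_tb[k]) for k in demand_tb)


def shortfall_pooled(capacity_tb, demand_tb):
    """A unified pool serves every workload from the combined capacity."""
    return max(0, sum(demand_tb.values()) - sum(capacity_tb.values()))


# Hypothetical environment: three 100 TB systems with uneven demand.
capacity_tb = {"block": 100, "file": 100, "object": 100}
demand_tb = {"block": 130, "file": 40, "object": 90}

siloed_gap = shortfall_siloed(capacity_tb, demand_tb)    # block is 30 TB short
idle_space = stranded_siloed(capacity_tb, demand_tb)     # 60 TB + 10 TB unused
pooled_gap = shortfall_pooled(capacity_tb, demand_tb)    # 260 TB fits in 300 TB

print(f"siloed shortfall: {siloed_gap} TB, stranded: {idle_space} TB")
print(f"pooled shortfall: {pooled_gap} TB")
```

In this made-up scenario the siloed setup is 30 TB short on block storage while 70 TB sits idle in the other two systems, whereas the pooled setup covers all demand with the same total capacity.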

SAN vs NAS vs Object vs Unified

SAN (block): Low‑latency LUNs for databases/VMs.

NAS (file): NFS/SMB shares for collaboration and multi-user access.

Object: Flat architecture for unstructured data with metadata.

Unified: One system exposing block + file + object with shared services, policy, and analytics.

NGX Storage: Delivering Unified Power

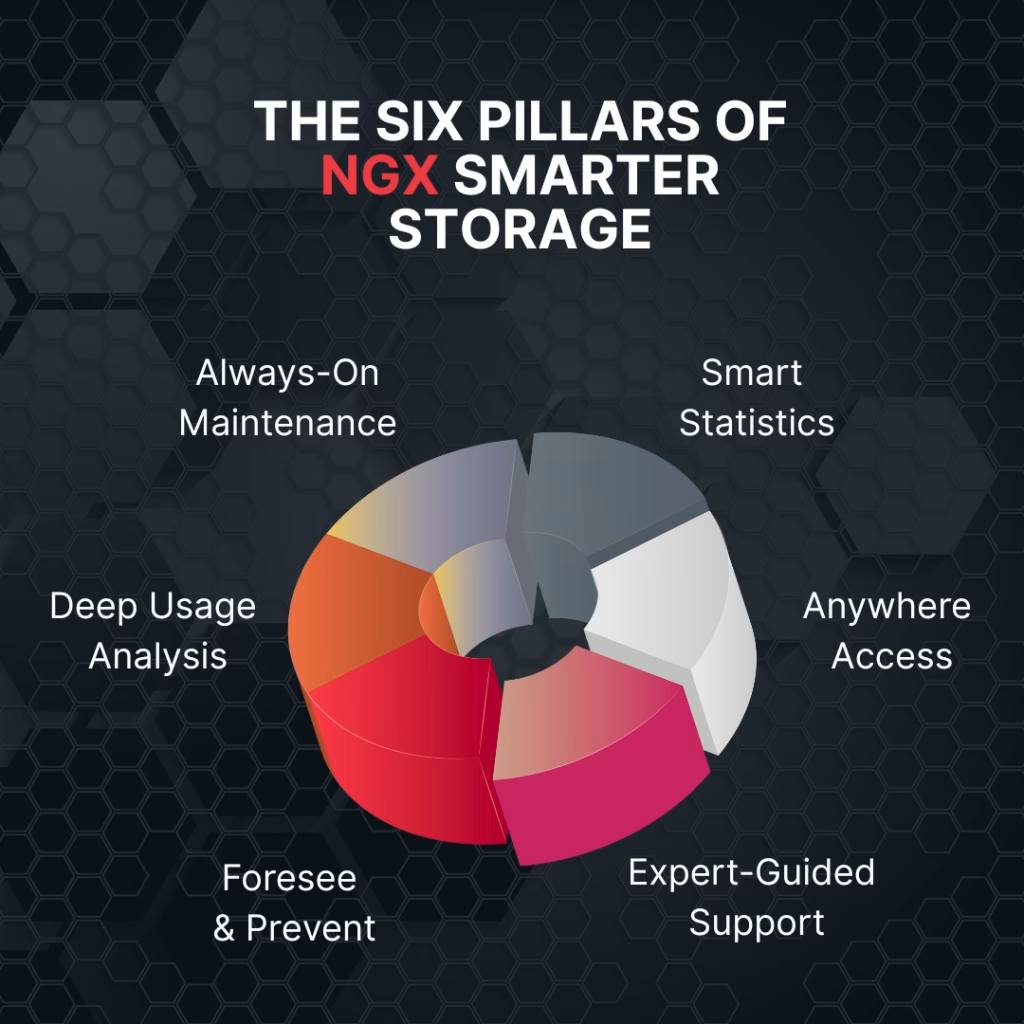

Recognizing the value of unified storage is an important first step. But true progress comes from aligning that understanding with a solution built on reliability, clarity, and purpose. NGX Storage brings those principles together in a system designed to meet the operational realities of modern IT environments.

Here’s how NGX contributes to a more cohesive and efficient storage infrastructure:

🔗 Unified Support for Block, File, and Object Protocols

NGX combines Fibre Channel, iSCSI, NFS, SMB, and S3 protocols into a single system. This allows you to support a wide range of workloads without maintaining separate infrastructure for each.

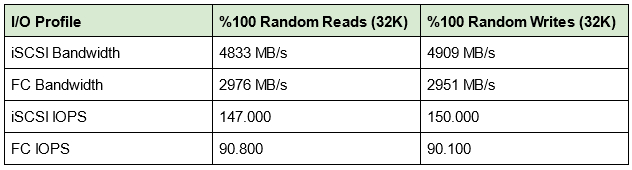

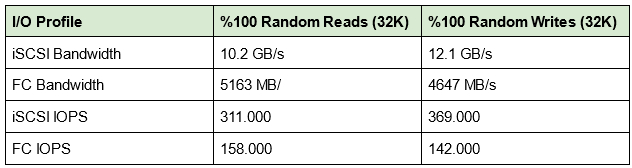

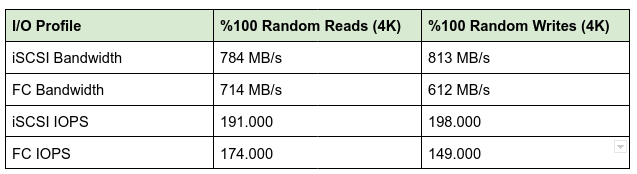

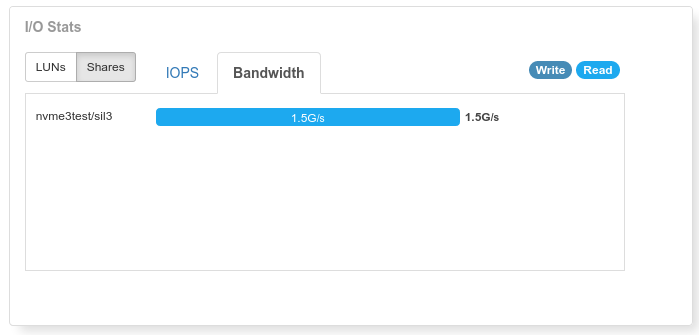

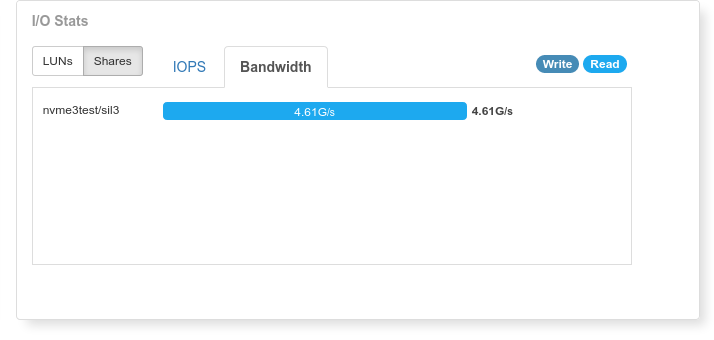
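As a quick reference, the five protocols named above can be grouped by the access model each one serves. The snippet below is a small sketch of that grouping; the dictionary and function names are illustrative and are not part of any NGX interface.

```python
from collections import defaultdict

# Access model served by each protocol named in this article.
PROTOCOL_ACCESS_MODEL = {
    "Fibre Channel": "block",
    "iSCSI": "block",
    "NFS": "file",
    "SMB": "file",
    "S3": "object",
}


def protocols_by_access_model(mapping):
    """Invert the protocol -> model mapping into model -> sorted protocol list."""
    grouped = defaultdict(list)
    for protocol, model in mapping.items():
        grouped[model].append(protocol)
    return {model: sorted(protocols) for model, protocols in grouped.items()}


grouped = protocols_by_access_model(PROTOCOL_ACCESS_MODEL)
for model, protocols in grouped.items():
    print(f"{model}: {', '.join(protocols)}")
```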

⚙️ Balanced Performance Across Workloads

Whether you're running high-throughput databases or managing large shared file environments, NGX is built to handle both efficiently—without forcing trade-offs between speed and stability.

📊 Intelligent Resource Management

Unified storage combines logical isolation with quality of service (QoS) controls to prevent noisy‑neighbour effects, so that a busy workload doesn’t degrade the others sharing the system. Workloads remain consistent, with resources optimized based on usage patterns.
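To show what a QoS limit can look like in principle, here is a minimal token-bucket sketch that caps the I/O rate of each workload independently. This is a general, widely used rate-limiting technique, not a description of how NGX implements QoS; the workload names and limits are hypothetical, and time is passed in explicitly so the example is deterministic.

```python
class TokenBucket:
    """Caps one workload's I/O rate: tokens refill steadily up to a burst limit."""

    def __init__(self, rate_iops, burst):
        self.rate_iops = rate_iops   # tokens added per second
        self.burst = burst           # maximum tokens that can accumulate
        self.tokens = burst          # start with a full bucket
        self.last_refill = 0.0       # timestamp of the previous call, in seconds

    def allow(self, now, cost=1.0):
        """Return True if an I/O of the given cost may proceed at time `now`."""
        elapsed = now - self.last_refill
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_iops)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical per-workload limits: the backup job is throttled hard,
# the database gets a much larger allowance.
limits = {
    "backup-job": TokenBucket(rate_iops=2, burst=2),
    "database": TokenBucket(rate_iops=100, burst=100),
}

# Both workloads issue five I/Os at the same instant (t = 0 s).
backup_admitted = sum(limits["backup-job"].allow(now=0.0) for _ in range(5))
database_admitted = sum(limits["database"].allow(now=0.0) for _ in range(5))

print(f"backup-job admitted: {backup_admitted} of 5")
print(f"database admitted: {database_admitted} of 5")
```

Because each workload draws from its own bucket, the backup job exhausting its allowance has no effect on the database's budget, which is the essence of noisy-neighbour protection.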

🖥️ Streamlined Interface for Everyday Tasks

NGX’s GUI is straightforward, enabling IT teams to create LUNs, file shares, and buckets quickly, monitor usage, and manage storage without complexity.

Next Step: Bring Clarity to Storage Complexity

Modern IT environments demand solutions that are both capable and clear. If managing separate systems has created more friction than value, it may be time for a change.

NGX Storage offers a thoughtful, unified approach—built not just to perform, but to simplify. No overhauls. No unnecessary complexity. Just a better way to manage what matters.

Explore your options with confidence. Reach out for a free consultation, request a low‑risk assessment and capacity plan, and see how NGX can help streamline your storage strategy.

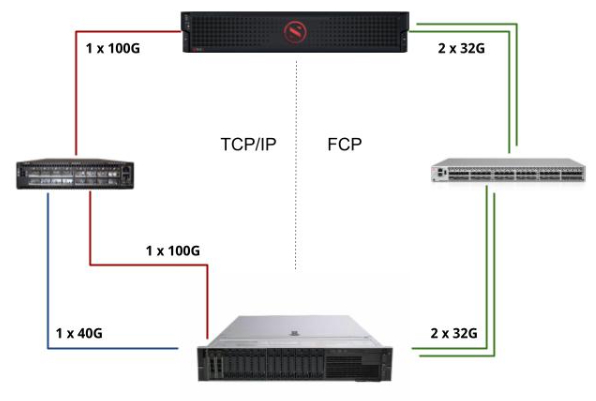

