Choosing between all-flash and hybrid flash storage is a critical decision for any data-intensive business. As we move deeper into 2025, understanding the pros and cons of each helps IT teams make better decisions. This post explains what each system (all-flash array and hybrid flash storage) does well and how NGX Storage can help your business through expert guidance, fast 24/7 support, and high-performance storage solutions.

What Is an All-Flash Array (AFA)?

An all-flash array is a storage system that keeps all data on solid-state drives (SSDs), typically NVMe or SAS. With no spinning disks, it delivers faster data access and lower power consumption.

It is built for speed, low-latency access, and predictable performance.

Advantages:

- Very low latency (sub-millisecond)

- High read and write performance (IOPS)

- Lower power and cooling requirements

- Well suited to AI, virtual desktop infrastructure (VDI), and other I/O-intensive applications

Drawbacks:

- Higher cost per TB

What Is Hybrid Storage?

Hybrid storage systems use both solid-state drives (SSDs) and hard disk drives (HDDs). SSDs handle active or frequently used data, while HDDs store less-used data. This tiered model balances performance and cost-efficiency, making it attractive for organizations with lots of different data types.
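To make the tiered model concrete, here is a minimal Python sketch of frequency-based placement. It assumes a simple rule: blocks accessed at least `hot_threshold` times in an observation window count as "hot" and go to SSD, and everything else goes to HDD. The function name, threshold, and block IDs are hypothetical. Real hybrid arrays use more sophisticated heuristics.

```python
from collections import Counter

# Hypothetical threshold: blocks accessed at least this many times in the
# observation window are treated as "hot" and placed on SSD.
HOT_THRESHOLD = 3

def place_blocks(access_log, hot_threshold=HOT_THRESHOLD):
    """Map each block ID to 'ssd' or 'hdd' based on its access count."""
    counts = Counter(access_log)
    return {
        block: ("ssd" if n >= hot_threshold else "hdd")
        for block, n in counts.items()
    }

if __name__ == "__main__":
    log = ["a", "b", "a", "c", "a", "b", "b", "d"]
    print(place_blocks(log))  # {'a': 'ssd', 'b': 'ssd', 'c': 'hdd', 'd': 'hdd'}
```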

Advantages:

- More affordable for large data needs

- Sufficient performance for mid-tier workloads

Drawbacks:

- Slower and less consistent performance than all-flash systems

- Higher energy consumption and cooling costs, which raise overall operating expenses
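The cost trade-off can be illustrated with a capacity-weighted average. In this model, a hybrid pool's raw media cost per TB is the SSD share times the SSD price plus the HDD share times the HDD price. The minimal Python sketch below assumes that simple linear model and uses placeholder prices, not real market data. It also ignores controllers, DRAM, power, and cooling, which can shift the total cost of ownership. The function name is hypothetical.

```python
def blended_cost_per_tb(ssd_fraction, ssd_cost_per_tb, hdd_cost_per_tb):
    """Capacity-weighted raw media cost per TB for a pool mixing SSD and HDD.

    ssd_fraction is the share of raw capacity on SSD (0.0 = all HDD,
    1.0 = all flash). Prices are in any consistent currency unit.
    """
    if not 0.0 <= ssd_fraction <= 1.0:
        raise ValueError("ssd_fraction must be between 0 and 1")
    return ssd_fraction * ssd_cost_per_tb + (1 - ssd_fraction) * hdd_cost_per_tb

if __name__ == "__main__":
    # Placeholder prices in arbitrary units; NOT real market data.
    ssd, hdd = 100.0, 20.0
    print(blended_cost_per_tb(1.0, ssd, hdd))   # all-flash -> 100.0
    print(blended_cost_per_tb(0.25, ssd, hdd))  # 25% flash hybrid -> 40.0
```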

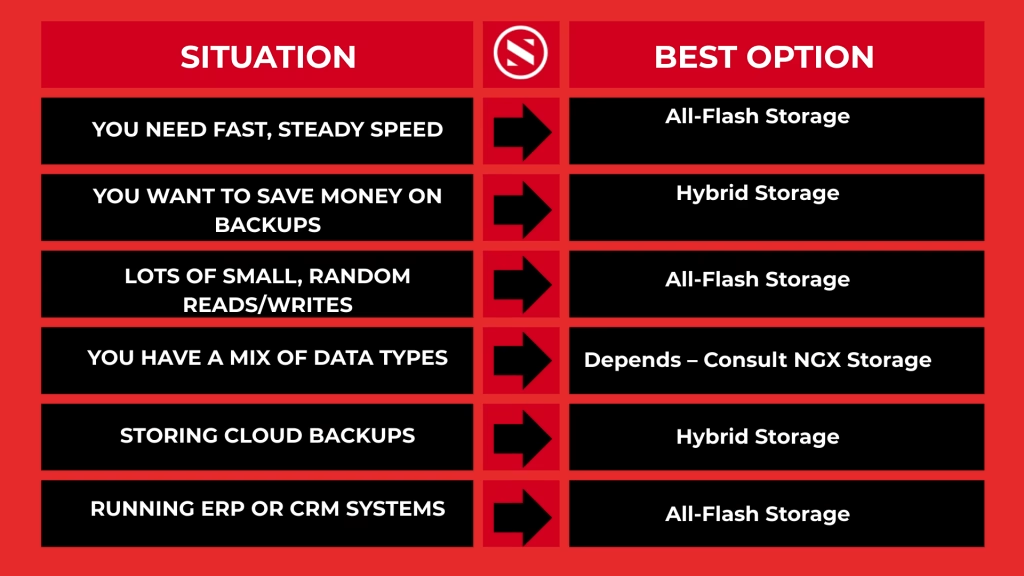

All-Flash Array vs. Hybrid Flash Storage: Which One Is Right for You?

All-Flash Storage vs. Hybrid Storage: When to Choose Which?

How NGX Storage Makes Storage Even Better

At NGX Storage, we’ve taken hybrid flash storage to the next level with a smart and powerful design.

Our system uses a DRAM-first architecture: it keeps the most recently accessed data in memory, with up to 8TB of DRAM cache. This helps your applications run faster and more smoothly, with ultra-low latency.
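Keeping "the most recent data" in memory is the behavior of a recency-based cache. The post does not describe NGX's actual eviction policy, so the minimal Python sketch below assumes a plain least-recently-used (LRU) policy as a stand-in. Capacity is counted in entries rather than bytes, and the class name is hypothetical.

```python
from collections import OrderedDict

class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self._data:
            return None  # miss: the caller would fall back to flash or disk
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("blk1", "data1")
    cache.put("blk2", "data2")
    cache.get("blk1")           # blk1 becomes most recently used
    cache.put("blk3", "data3")  # over capacity: evicts blk2
    print(cache.get("blk2"))    # None
```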

We also use a unique method called Random Flash Sequential Disk. Here's how it works:

- Random I/O goes to high-speed flash (SSD)

- Sequential I/O goes to large-capacity hard drives (HDD)

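Because hard drives handle large sequential streams well but slow down sharply on random seeks, this split plays to each medium's strengths: you get the speed of flash with the cost savings of disk, all in one system.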
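The post does not say how NGX classifies I/O as random or sequential. The minimal Python sketch below assumes a simple per-stream rule: a request that starts exactly where the previous request on the same stream ended is sequential and goes to HDD, and anything else goes to SSD. `IORequest`, `IORouter`, and the notion of a `stream_id` are hypothetical names for illustration.

```python
from dataclasses import dataclass

@dataclass
class IORequest:
    stream_id: int  # hypothetical: e.g. a file or client handle
    offset: int     # byte offset where the request starts
    length: int     # request size in bytes

class IORouter:
    """Route requests that continue a stream contiguously to HDD, others to SSD."""

    def __init__(self):
        self._next_offset = {}  # stream_id -> offset expected if the stream is sequential

    def route(self, req):
        expected = self._next_offset.get(req.stream_id)
        self._next_offset[req.stream_id] = req.offset + req.length
        # The first request on a stream has no history, so it is treated as random.
        return "hdd" if expected == req.offset else "ssd"

if __name__ == "__main__":
    router = IORouter()
    reqs = [IORequest(1, 0, 4096), IORequest(1, 4096, 4096),
            IORequest(1, 8192, 4096), IORequest(2, 917504, 4096)]
    print([router.route(r) for r in reqs])  # ['ssd', 'hdd', 'hdd', 'ssd']
```

A production system would typically wait for a run of several contiguous requests before treating a stream as sequential. A single-request check is used here only to keep the sketch short.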

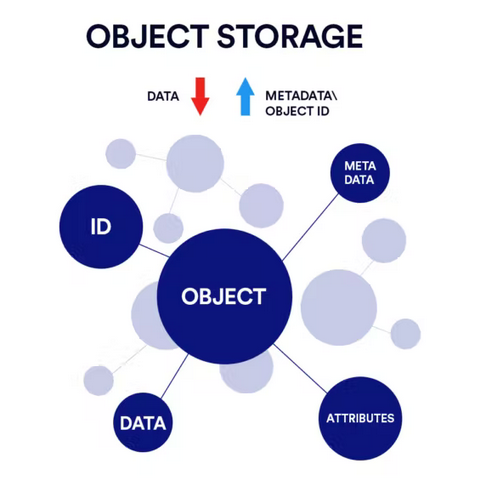

NGX also uses a flash tier to store important metadata, such as the index the system uses to locate data quickly. Keeping this metadata on fast SSDs helps applications load faster and keeps storage responsive, even under heavy load. This works hand in hand with our DRAM cache to deliver smooth, high-speed performance.
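How a DRAM cache and a flash-resident metadata index cooperate on a read can be sketched as a toy read path. A read checks memory first. On a miss, a single lookup in the fast metadata index finds which tier and address hold the block, and the block is then read from that tier. This minimal Python sketch models every tier and the index as plain dictionaries, and it omits cache eviction for brevity. The class and field names are hypothetical and do not reflect NGX internals.

```python
class HybridReadPath:
    """Toy read path: DRAM cache first, then a flash-resident metadata index."""

    def __init__(self, metadata_index, tiers):
        self.dram = {}                        # cached block data (no eviction in this sketch)
        self.metadata_index = metadata_index  # block_id -> (tier, address); assumed to live on flash
        self.tiers = tiers                    # tier name -> {address: data}
        self.stats = {"dram_hits": 0, "index_lookups": 0}

    def read(self, block_id):
        if block_id in self.dram:
            self.stats["dram_hits"] += 1
            return self.dram[block_id]
        # Cache miss: one fast metadata lookup locates the block.
        self.stats["index_lookups"] += 1
        tier, address = self.metadata_index[block_id]
        data = self.tiers[tier][address]
        self.dram[block_id] = data  # populate the cache for later reads
        return data

if __name__ == "__main__":
    index = {"blk1": ("hdd", 42), "blk2": ("ssd", 7)}
    tiers = {"ssd": {7: "hot"}, "hdd": {42: "cold"}}
    path = HybridReadPath(index, tiers)
    path.read("blk1")
    path.read("blk1")
    print(path.stats)  # {'dram_hits': 1, 'index_lookups': 1}
```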

Why choose NGX?

- ⚡ Lightning-fast performance where it matters.

- 📉 Petabyte-scale performance, without overspending.

- 🤝 Trusted expertise with rapid-response support you can count on.

- 🚀 Engineered for excellence. Trusted by industry leaders powering mission-critical operations.

Whether you're powering databases, backups, or cloud systems, NGX delivers enterprise-class reliability and speed, backed by expert support, without compromise.

Next Steps

Want to experience how NGX combines speed, smart caching, and scale in one powerful system? Contact our team today to book a live demo and discover how NGX can transform your storage infrastructure with performance you can feel.

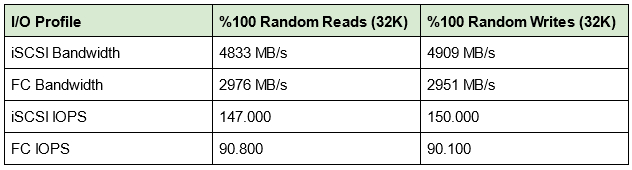

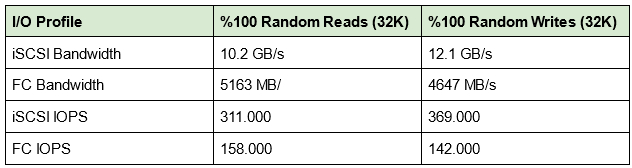

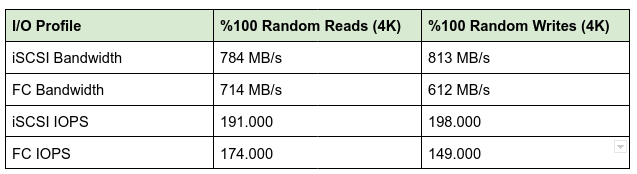

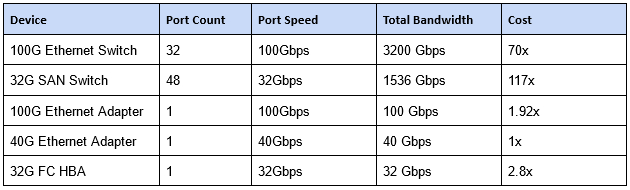

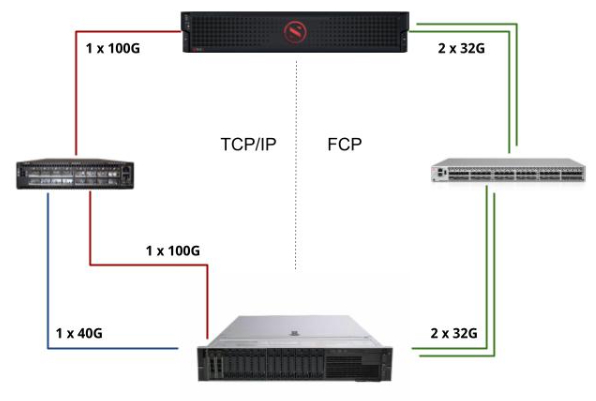
