Optimizing Network File System (NFS) performance can be challenging and requires a nuanced understanding of how the protocol behaves. A key constraint is that, by default, the client communicates with the server over a single TCP connection, so all requests and replies for a mount share one socket.

Even when the network link has substantial bandwidth headroom, NFS client throughput tends to plateau: on a single mount, reads reach around 2 GB/s and writes around 1.2 GB/s. The bottleneck is not the traditional suspects, such as the operating system or the CPU, but limits intrinsic to the NFS client's protocol stack.

Linux provides a mount option called 'nconnect' that lets the NFS client establish multiple TCP connections to the same server for a single NFS mount, instead of the default single connection. It accepts values from 1 to 16 and is available in Linux kernel 5.3 and later.
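Because nconnect first appeared in Linux 5.3, a quick check of the running kernel can save a failed mount attempt. The sketch below is a hypothetical helper (`kernel_supports_nconnect` is not a standard tool) that compares the major.minor part of `uname -r` against 5.3 using `sort -V`. It assumes GNU coreutils, and since distribution kernels may backport nconnect to older versions, a negative result is a hint rather than a verdict.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check whether a kernel release string is at least 5.3,
# the first mainline release with the nconnect NFS mount option.
# Assumes GNU coreutils (for `sort -V`). Distribution kernels may backport
# nconnect to older versions, so a "no" here is not conclusive.

# kernel_supports_nconnect RELEASE  -> exit status 0 if RELEASE >= 5.3
kernel_supports_nconnect() {
    local release="$1" base
    # Keep only the leading "major.minor" part, e.g. "5.15" from "5.15.0-91-generic".
    base=$(printf '%s\n' "$release" | grep -oE '^[0-9]+\.[0-9]+')
    [ -n "$base" ] || return 1
    # With version sort, "5.3" comes first (or ties) only when base >= 5.3.
    [ "$(printf '%s\n' 5.3 "$base" | sort -V | head -n1)" = "5.3" ]
}

if kernel_supports_nconnect "$(uname -r)"; then
    echo "Kernel $(uname -r): nconnect should be available"
else
    echo "Kernel $(uname -r): nconnect may not be supported"
fi
```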

Using 'nconnect' works the same way as any other mount option:

```
mount -t nfs -o rw,nconnect=16 192.168.1.1:/exports/ngxpool/share /mnt
```
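After mounting, one way to confirm that the client really opened several connections is to count established TCP sockets to the server's NFS port. The helper below is a hypothetical sketch: it assumes the server uses the standard NFS port 2049 and parses the column layout printed by iproute2's `ss -Htn` (state, receive queue, send queue, local address, peer address).

```shell
#!/usr/bin/env bash
# Hypothetical helper: count established TCP connections from this client to
# an NFS server. Assumes the server listens on the standard NFS port 2049 and
# that input comes from `ss -Htn` (iproute2), whose columns are:
#   State  Recv-Q  Send-Q  Local-Address:Port  Peer-Address:Port

# count_nfs_conns SERVER_IP   (reads `ss -Htn` output on stdin)
count_nfs_conns() {
    local peer="$1:2049"
    # Count rows in state ESTAB whose peer column is exactly SERVER_IP:2049.
    awk -v peer="$peer" '$1 == "ESTAB" && $5 == peer { n++ } END { print n + 0 }'
}

# Usage on a live client:
#   ss -Htn | count_nfs_conns 192.168.1.1
```

Connections to a server may be shared with other mounts of that same server, so the count can include them as well as /mnt.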

Let's examine a performance comparison from a single host using NGX Storage:

Our test setup consists of a single client with a 40 Gbit Ethernet connection to a switch for NFS testing. Using one client keeps the configuration simple and focuses the measurement on what a single NFS mount can deliver.

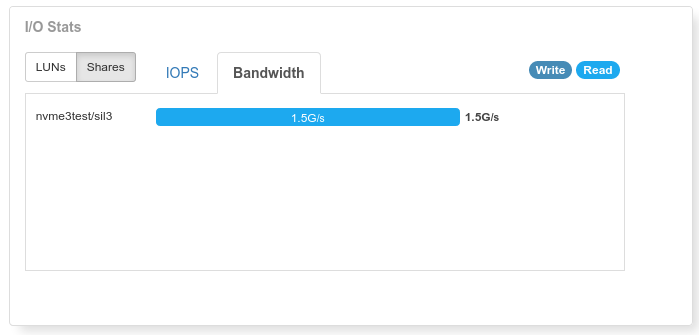
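To read the fio numbers against the hardware, it helps to convert the 40 Gbit/s link speed into the units fio reports (decimal MB/s and binary MiB/s). This small awk sketch, built around a hypothetical `link_capacity` function, computes the raw line rate only; it ignores Ethernet, IP, TCP and RPC overhead, so the usable NFS payload rate is somewhat lower.

```shell
#!/usr/bin/env bash
# Sketch: convert a link speed in Gbit/s to the MB/s and MiB/s units fio prints.
# Raw line rate only: Ethernet, IP, TCP and RPC headers are ignored, so usable
# NFS payload throughput will be somewhat lower.

# link_capacity GBIT_PER_S
link_capacity() {
    awk -v gbit="$1" 'BEGIN {
        bytes = gbit * 1e9 / 8          # bits per second -> bytes per second
        printf "%.0f MB/s %.0f MiB/s\n", bytes / 1e6, bytes / (1024 * 1024)
    }'
}

link_capacity 40   # prints "5000 MB/s 4768 MiB/s"
```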

Case 1: Without "nconnect"

```
root@proxmox:~# fio --rw=read --ioengine=libaio --name=test_nconncect-1 --size=20g --direct=1 --invalidate=1 --fsync_on_close=1 --norandommap --group_reporting --exitall --runtime=60 --time_based --iodepth=128 --numjobs=1 --bs=128k --filename=/mnt/test
test_nconncect-1: (g=0): rw=read, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=128
fio-3.25
Starting 1 process
Jobs: 1 (f=1): [R(1)][100.0%][r=1221MiB/s][r=9767 IOPS][eta 00m:00s]
test_nconncect-1: (groupid=0, jobs=1): err= 0: pid=60345: Fri Feb 2 17:04:57 2024
  read: IOPS=11.0k, BW=1496MiB/s (1568MB/s)(87.7GiB/60012msec)
    slat (usec): min=12, max=286, avg=24.59, stdev= 9.19
    clat (usec): min=2824, max=28405, avg=10670.42, stdev=1509.28
     lat (usec): min=2892, max=28439, avg=10695.08, stdev=1509.38
    clat percentiles (usec):
     | 1.00th=[ 8717], 5.00th=[ 8979], 10.00th=[ 9110], 20.00th=[ 9372],
     | 30.00th=[ 9634], 40.00th=[ 9896], 50.00th=[10159], 60.00th=[10552],
     | 70.00th=[11207], 80.00th=[12125], 90.00th=[13173], 95.00th=[13304],
     | 99.00th=[14615], 99.50th=[15139], 99.90th=[16581], 99.95th=[17433],
     | 99.99th=[23462]
   bw ( MiB/s): min= 1189, max= 1577, per=100.00%, avg=1495.81, stdev=119.18, samples=120
   iops : min= 9518, max=12618, avg=11966.47, stdev=953.42, samples=120
  lat (msec) : 4=0.01%, 10=42.86%, 20=57.11%, 50=0.03%
  cpu : usr=3.26%, sys=31.89%, ctx=715792, majf=0, minf=4112
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=718115,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
   READ: bw=1496MiB/s (1568MB/s), 1496MiB/s-1496MiB/s (1568MB/s-1568MB/s), io=87.7GiB (94.1GB), run=60012-60012msec
```

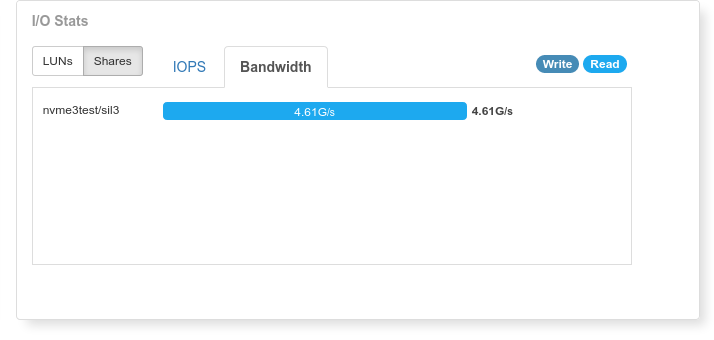

Case 2: With "nconnect"

```
root@proxmox:~# fio --rw=read --ioengine=libaio --name=test_nconncect-16 --size=20g --direct=1 --invalidate=1 --fsync_on_close=1 --norandommap --group_reporting --exitall --runtime=60 --time_based --iodepth=128 --numjobs=2 --bs=128k --filename=/mnt/test
test_nconncect-16: (g=0): rw=read, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=128
...
fio-3.25
Starting 2 processes
Jobs: 2 (f=2): [R(2)][100.0%][r=4707MiB/s][r=37.7k IOPS][eta 00m:00s]
test_nconncect-1: (groupid=0, jobs=2): err= 0: pid=66333: Fri Feb 2 17:45:55 2024
  read: IOPS=37.6k, BW=4705MiB/s (4934MB/s)(276GiB/60007msec)
    slat (usec): min=9, max=849, avg=25.58, stdev=11.04
    clat (usec): min=2452, max=18476, avg=6774.13, stdev=1787.08
     lat (usec): min=2470, max=18507, avg=6799.76, stdev=1787.05
    clat percentiles (usec):
     | 1.00th=[ 5276], 5.00th=[ 5473], 10.00th=[ 5538], 20.00th=[ 5604],
     | 30.00th=[ 5735], 40.00th=[ 5800], 50.00th=[ 5866], 60.00th=[ 6063],
     | 70.00th=[ 7308], 80.00th=[ 7570], 90.00th=[11076], 95.00th=[11338],
     | 99.00th=[11600], 99.50th=[11731], 99.90th=[11863], 99.95th=[11994],
     | 99.99th=[12125]
   bw ( MiB/s): min= 4683, max= 4712, per=100.00%, avg=4706.41, stdev= 1.68, samples=238
   iops : min=37466, max=37700, avg=37651.29, stdev=13.48, samples=238
  lat (msec) : 4=0.01%, 10=88.73%, 20=11.26%
  cpu : usr=2.67%, sys=50.41%, ctx=994400, majf=0, minf=20609
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=2258833,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
   READ: bw=4705MiB/s (4934MB/s), 4705MiB/s-4705MiB/s (4934MB/s-4934MB/s), io=276GiB (296GB), run=60007-60007msec
```
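Comparing runs by eye is error-prone, so a small script can extract the aggregate read bandwidth from fio's plain-text summary and compute the ratio between two runs. The functions `fio_read_bw` and `speedup` are hypothetical helpers, not part of fio; they assume fio's default text output (not `--output-format=json`) with the bandwidth reported in MiB/s, as in the two runs above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: pull the aggregate read bandwidth (MiB/s) out of fio's
# plain-text "Run status" summary and compare two runs. Assumes default text
# output with bandwidth in MiB/s; KiB/s or GiB/s values are not handled.

# fio_read_bw: read fio text output on stdin, print the READ bw in MiB/s.
fio_read_bw() {
    # Match the summary line "   READ: bw=NNNNMiB/s ..." and keep only NNNN.
    sed -n 's/^ *READ: bw=\([0-9.]*\)MiB\/s.*/\1/p' | head -n1
}

# speedup BASELINE NEW: ratio NEW / BASELINE with two decimals.
speedup() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", b / a }'
}
```

For example, save each run with fio's `--output=case1.txt` option and then call `speedup "$(fio_read_bw < case1.txt)" "$(fio_read_bw < case2.txt)"`; with the bandwidths above (1496 and 4705 MiB/s) it prints 3.15.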

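In conclusion, the 'nconnect' mount option, available in Linux kernel 5.3 and later, is a functional way to open multiple TCP connections for a single NFS mount. In these fio tests, sequential read bandwidth rose from 1496 MiB/s to 4705 MiB/s (more than three times higher), IOPS rose from 11.0k to 37.6k, and mean completion latency (clat) dropped from about 10.7 ms to about 6.8 ms. Note that the second run also used two fio jobs (--numjobs=2) instead of one, so part of the gain may come from the extra job rather than from nconnect alone. Taken together with the NGX Storage metrics, these results point to 'nconnect' as a practical tool for improving NFS performance.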
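Another way to read the results is relative to the client's 40 Gbit/s link. The sketch below, using a hypothetical `link_utilization` function, divides fio's decimal MB/s figure by the raw line rate (40 Gbit/s = 5000 MB/s). Because protocol overhead is ignored, the result is a share of the raw line rate, not of the achievable NFS payload rate.

```shell
#!/usr/bin/env bash
# Sketch: express a measured fio bandwidth (decimal MB/s) as a percentage of
# a link's raw line rate. Protocol overhead is ignored, so 100% is a ceiling
# that real NFS traffic cannot quite reach.

# link_utilization MB_PER_S LINK_GBIT
link_utilization() {
    awk -v mbs="$1" -v gbit="$2" 'BEGIN {
        line_mbs = gbit * 1000 / 8       # e.g. 40 Gbit/s -> 5000 MB/s
        printf "%.1f%%\n", 100 * mbs / line_mbs
    }'
}

link_utilization 1568 40   # Case 1 (without nconnect): prints 31.4%
link_utilization 4934 40   # Case 2 (with nconnect):    prints 98.7%
```

By this measure, the Case 2 run comes close to the raw capacity of the single 40 Gbit link, which suggests the client's network link rather than the NFS transport becomes the next limit.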

NOTE: Do not enable this feature blindly! Read your NFS client documentation to confirm that your client version supports nconnect and to learn its recommended best practices.